📡 Real-Time Data Processing with Kafka, AWS Cloud, MySQL, and Looker Studio

📘 Project Overview

In this project, I scraped video metadata from YouTube’s tech niche using `yt_dlp`, streamed it through Kafka, cleaned it and stored it in a MySQL database hosted on AWS RDS, and then visualized the insights in Looker Studio.
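The scraping step can be illustrated with a minimal sketch of pulling search-result metadata through `yt_dlp`'s Python API. It assumes the scraper searched YouTube by keyword. The helper names `search_videos` and `to_record`, the selected fields, and the `"ytsearchN:"` search approach are illustrative assumptions, not the repository's actual code.

```python
def to_record(info: dict, keyword: str) -> dict:
    """Keep only the metadata fields the dashboard needs from a yt_dlp info dict."""
    return {
        "video_id": info.get("id"),
        "title": info.get("title"),
        # Fall back to the uploader name when the channel field is missing.
        "channel": info.get("channel") or info.get("uploader"),
        "keyword": keyword,  # hypothetical: the search term the video was found under
        "view_count": info.get("view_count"),
        "like_count": info.get("like_count"),
        "upload_date": info.get("upload_date"),  # a "YYYYMMDD" string
    }


def search_videos(keyword: str, limit: int = 10) -> list:
    """Return metadata records for the top `limit` YouTube search results for `keyword`."""
    from yt_dlp import YoutubeDL  # pip install yt-dlp

    options = {"quiet": True, "skip_download": True}
    with YoutubeDL(options) as ydl:
        # "ytsearchN:query" asks yt_dlp for the first N search results;
        # download=False fetches metadata only, never the video files.
        result = ydl.extract_info(f"ytsearch{limit}:{keyword}", download=False)
    return [to_record(entry, keyword) for entry in (result or {}).get("entries", []) if entry]
```

Calling `search_videos("python tutorial", limit=5)` needs network access. Because `download=False` still resolves each result, the records include `view_count` and `like_count` whenever YouTube exposes them (otherwise those fields are `None`).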

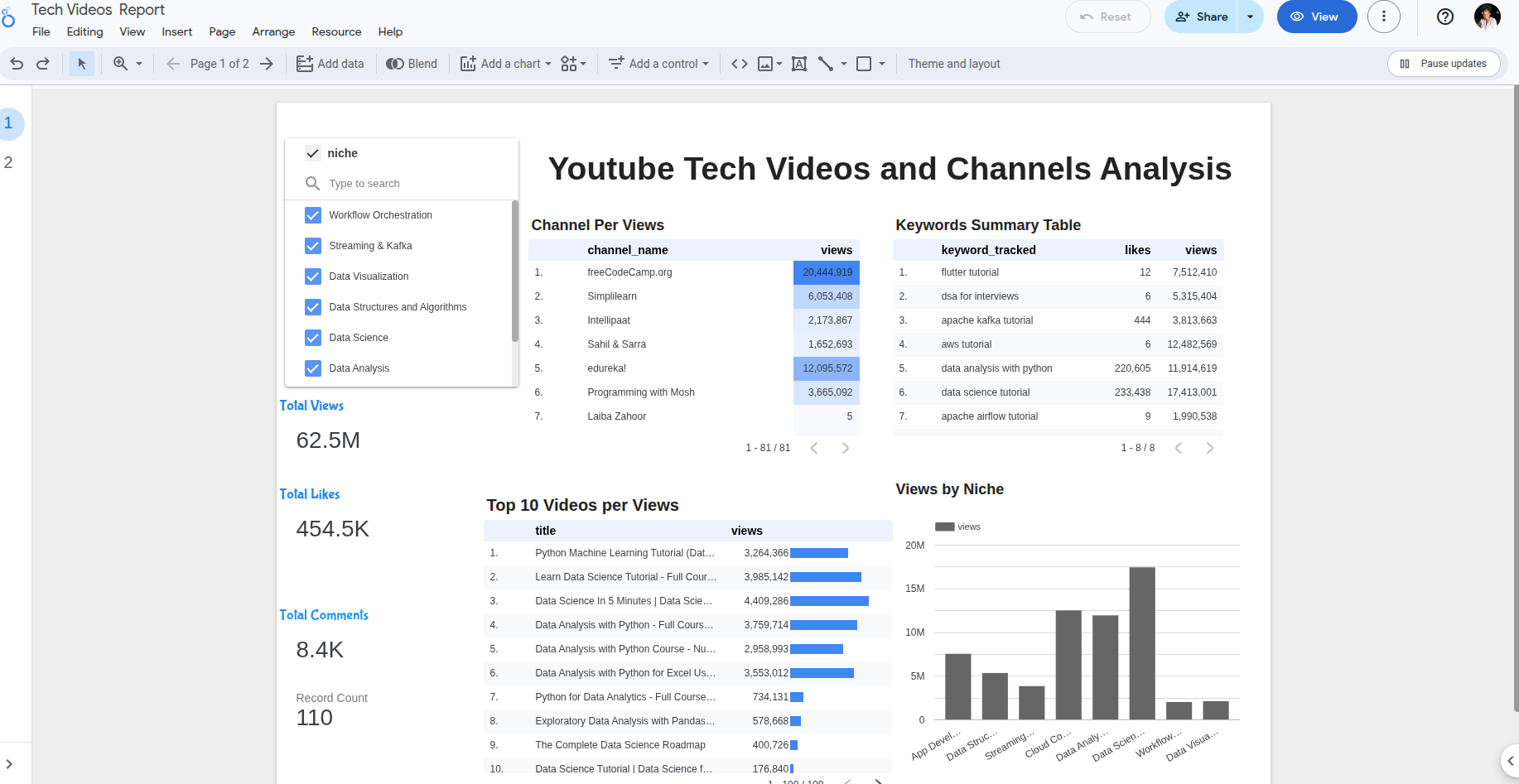

📊 What the Dashboard Shows

- 👀 Channels with the most views and likes

- 🔑 Keywords associated with high engagement

- 🧠 Niches generating the most traction

🧱 Project Structure

Repository: https://github.com/JoyKimaiyo/youtubetechvideos

├── producer.py 🚀 Scrapes & streams data

├── consumer.py 📥 Consumes and stores data

├── config.py ⚙️ DB & Kafka settings

└── requirements.txt 📦 Dependencies
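The contents of `config.py` aren't shown in this write-up. A plausible shape, assuming the settings are read from environment variables so that RDS credentials stay out of version control, might look like the sketch below; every variable name and default value is hypothetical.

```python
"""config.py: a hypothetical settings module shared by the producer and consumer."""
import os

# Kafka settings (assumes a local broker started alongside ZooKeeper).
KAFKA_BOOTSTRAP_SERVERS = os.getenv("KAFKA_BOOTSTRAP_SERVERS", "localhost:9092")
KAFKA_TOPIC = os.getenv("KAFKA_TOPIC", "youtube_tech_videos")

# MySQL on AWS RDS: the endpoint and credentials come from the environment.
DB_HOST = os.getenv("DB_HOST", "localhost")
DB_PORT = int(os.getenv("DB_PORT", "3306"))
DB_USER = os.getenv("DB_USER", "admin")
DB_PASSWORD = os.getenv("DB_PASSWORD", "")
DB_NAME = os.getenv("DB_NAME", "youtube")


def mysql_params() -> dict:
    """Keyword arguments suitable for pymysql.connect (or a similar DB-API driver)."""
    return {
        "host": DB_HOST,
        "port": DB_PORT,
        "user": DB_USER,
        "password": DB_PASSWORD,
        "database": DB_NAME,
    }
```

Reading from the environment lets the same module point at a local MySQL during development and at the RDS endpoint in the cloud without code changes.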

☁️ MySQL Cloud Setup & Connection

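AWS RDS (Amazon Relational Database Service) hosts MySQL as a managed service and takes care of backups, patching, and storage scaling. I created my instance in the AWS Console and connected to it with the MySQL command-line client. Because the database is reachable over the network, both the Kafka consumer and Looker Studio can use it without a locally hosted server.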
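A minimal sketch of the database side follows, assuming the PyMySQL driver and a single `videos` table. The table name, columns, and helper functions are hypothetical; the write-up does not show the real schema.

```python
"""Hypothetical table definition and RDS connection helper."""

# One row per video; column names are illustrative, not taken from the repository.
CREATE_VIDEOS_TABLE = """
CREATE TABLE IF NOT EXISTS videos (
    video_id    VARCHAR(20)  PRIMARY KEY,
    title       VARCHAR(500) NOT NULL,
    channel     VARCHAR(255),
    keyword     VARCHAR(100),
    view_count  BIGINT DEFAULT 0,
    like_count  BIGINT DEFAULT 0,
    upload_date DATE
)
"""


def connect(host: str, user: str, password: str, database: str, port: int = 3306):
    """Open a connection to the RDS MySQL endpoint (requires PyMySQL)."""
    import pymysql  # assumed driver; mysql-connector-python works similarly

    return pymysql.connect(
        host=host,  # the RDS endpoint shown in the AWS Console
        user=user,
        password=password,
        database=database,
        port=port,
        connect_timeout=10,  # fail fast if the security group blocks the connection
    )


def ensure_schema(conn) -> None:
    """Create the videos table if it does not exist yet."""
    with conn.cursor() as cur:
        cur.execute(CREATE_VIDEOS_TABLE)
    conn.commit()
```

From the MySQL CLI, the equivalent connection is `mysql -h <rds-endpoint> -P 3306 -u <user> -p`, where the endpoint is a placeholder for the hostname listed on the instance's page in the AWS Console.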

🔁 Kafka Streaming

I started ZooKeeper and a Kafka broker to handle the real-time streaming. The producer (`producer.py`) scraped YouTube video metadata and published each record to a Kafka topic. The consumer (`consumer.py`) subscribed to that topic, cleaned each record, and saved it to the RDS-hosted MySQL database.
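The producer/consumer flow can be sketched with the `kafka-python` client. That choice is an assumption: the post does not say which Kafka library was used (`confluent-kafka` is a common alternative), and the topic name, consumer group, record fields, and `videos` table are hypothetical. Records travel as JSON, and the consumer upserts each cleaned record so that a re-scraped video updates its counts instead of failing on a duplicate key.

```python
import json
from datetime import datetime

# Hypothetical settings; in the project these would live in config.py.
BOOTSTRAP_SERVERS = "localhost:9092"
TOPIC = "youtube_tech_videos"  # assumed topic name


def serialize(record: dict) -> bytes:
    """Encode a record as UTF-8 JSON for the Kafka message value."""
    return json.dumps(record).encode("utf-8")


def deserialize(raw: bytes) -> dict:
    """Decode a Kafka message value back into a dict."""
    return json.loads(raw.decode("utf-8"))


def clean_record(record: dict) -> dict:
    """Normalize one scraped record before it is written to MySQL."""
    upload = record.get("upload_date")  # yt_dlp reports upload dates as "YYYYMMDD"
    upload_iso = datetime.strptime(upload, "%Y%m%d").date().isoformat() if upload else None
    return {
        "video_id": record["video_id"],
        "title": (record.get("title") or "").strip(),
        "channel": (record.get("channel") or "unknown").strip(),
        "keyword": (record.get("keyword") or "").strip().lower(),
        "view_count": int(record.get("view_count") or 0),
        "like_count": int(record.get("like_count") or 0),
        "upload_date": upload_iso,
    }


def produce(records) -> None:
    """Publish each record to the topic (requires kafka-python and a running broker)."""
    from kafka import KafkaProducer  # assumed client library: kafka-python

    producer = KafkaProducer(bootstrap_servers=BOOTSTRAP_SERVERS, value_serializer=serialize)
    for record in records:
        producer.send(TOPIC, record)
    producer.flush()  # block until all buffered messages are delivered
    producer.close()


# MySQL upsert using PyMySQL's named "%(name)s" placeholders.
UPSERT_SQL = (
    "INSERT INTO videos (video_id, title, channel, keyword, view_count, like_count, upload_date) "
    "VALUES (%(video_id)s, %(title)s, %(channel)s, %(keyword)s, "
    "%(view_count)s, %(like_count)s, %(upload_date)s) "
    "ON DUPLICATE KEY UPDATE view_count = VALUES(view_count), like_count = VALUES(like_count)"
)


def consume(db_conn) -> None:
    """Read records from the topic, clean them, and upsert them into MySQL."""
    from kafka import KafkaConsumer

    consumer = KafkaConsumer(
        TOPIC,
        bootstrap_servers=BOOTSTRAP_SERVERS,
        group_id="mysql-writer",  # hypothetical consumer group
        auto_offset_reset="earliest",  # start from the oldest message on the first run
        value_deserializer=deserialize,
    )
    with db_conn.cursor() as cur:
        for message in consumer:  # blocks and waits for new messages
            cur.execute(UPSERT_SQL, clean_record(message.value))
            db_conn.commit()
```

`consume` expects a PyMySQL connection, since it relies on the cursor working as a context manager and on `%(name)s` placeholders. Committing after every message keeps the sketch simple; batching inserts would cut round trips to RDS.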

📈 Connecting to Looker Studio

Looker Studio powered the live dashboards. I connected it to the RDS MySQL database through Looker Studio's MySQL connector and charted high-level metrics such as total views per niche, the most popular channels, and trending keywords. These insights can help creators and marketers shape their content strategy.
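The dashboard metrics correspond roughly to aggregate queries like the ones below. They assume a hypothetical `videos` table with `channel`, `keyword`, `view_count`, and `like_count` columns, treat the search keyword as the niche, and define engagement as likes per view; none of these definitions come from the original dashboard. The SQL is plain enough to run on MySQL and, for quick local checks, on SQLite.

```python
"""Hypothetical SQL behind the dashboard charts, run through any DB-API connection."""

# Channels with the most views and likes.
TOP_CHANNELS = """
SELECT channel, SUM(view_count) AS total_views, SUM(like_count) AS total_likes
FROM videos
GROUP BY channel
ORDER BY total_views DESC
LIMIT 10
"""

# Total views per niche, treating the search keyword as the niche.
VIEWS_PER_KEYWORD = """
SELECT keyword, SUM(view_count) AS total_views
FROM videos
GROUP BY keyword
ORDER BY total_views DESC
"""

# Engagement per keyword as likes per view; NULLIF avoids dividing by zero.
ENGAGEMENT_PER_KEYWORD = """
SELECT keyword, SUM(like_count) * 1.0 / NULLIF(SUM(view_count), 0) AS like_rate
FROM videos
GROUP BY keyword
ORDER BY like_rate DESC
"""


def run_query(conn, sql: str) -> list:
    """Execute a read-only query and return all rows as a list of tuples."""
    cur = conn.cursor()
    try:
        cur.execute(sql)
        return list(cur.fetchall())
    finally:
        cur.close()
```

In Looker Studio, one of these statements could be supplied through the MySQL connector's custom query option, so each chart reads a pre-aggregated result instead of the raw table.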